Install the NVIDIA CUDA toolkit on GPU Linodes

To take advantage of the powerful parallel processing capabilities offered by GPU Linodes equipped with NVIDIA Quadro RTX cards, you first need to install NVIDIA's CUDA Toolkit. This guide walks you through deploying a GPU Linode and installing the CUDA Toolkit.

- Deploy a GPU Linode using Cloud Manager, the Linode CLI, or the Linode API. For step-by-step instructions, follow the guide for whichever method you choose.

  Be sure to select a distribution that's compatible with the NVIDIA CUDA Toolkit. Review NVIDIA's System requirements to learn which distributions are supported.

- Upgrade your system and install the kernel headers and development packages for your distribution. See NVIDIA's Pre-installation actions for additional information.

  - Ubuntu and Debian

        sudo apt update && sudo apt upgrade
        sudo apt install build-essential linux-headers-$(uname -r)

  - CentOS/RHEL 8, AlmaLinux 8, Rocky Linux 8, and Fedora

        sudo dnf upgrade
        sudo dnf install gcc kernel-devel-$(uname -r) kernel-headers-$(uname -r)

  - CentOS/RHEL 7

        sudo yum update
        sudo yum install gcc kernel-devel-$(uname -r) kernel-headers-$(uname -r)
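If you script this step across several images, the three cases above can be selected from the fields in /etc/os-release. The following is a minimal sketch, not part of NVIDIA's or Linode's tooling: the function name `prereq_commands` is hypothetical, and it assumes the `ID` and `VERSION_ID` values these distributions normally report (for example `ubuntu`, `almalinux`, `rocky`). It only prints the commands from this guide so you can review them before running anything.

```shell
#!/bin/sh
# prereq_commands ID VERSION_ID
# Prints the prerequisite commands from this guide for one distribution.
# ID and VERSION_ID are the fields found in /etc/os-release.
# The single quotes keep $(uname -r) literal, so nothing is executed here.
prereq_commands() {
  pc_id="$1"
  pc_major="${2%%.*}"   # "8.9" -> "8"
  case "$pc_id" in
    ubuntu|debian)
      echo 'sudo apt update && sudo apt upgrade'
      echo 'sudo apt install build-essential linux-headers-$(uname -r)'
      ;;
    centos|rhel)
      if [ "$pc_major" = "7" ]; then
        echo 'sudo yum update'
        echo 'sudo yum install gcc kernel-devel-$(uname -r) kernel-headers-$(uname -r)'
      else
        # Assumption: any release other than 7 is handled like the 8 case.
        echo 'sudo dnf upgrade'
        echo 'sudo dnf install gcc kernel-devel-$(uname -r) kernel-headers-$(uname -r)'
      fi
      ;;
    almalinux|rocky|fedora)
      echo 'sudo dnf upgrade'
      echo 'sudo dnf install gcc kernel-devel-$(uname -r) kernel-headers-$(uname -r)'
      ;;
    *)
      echo "unknown or unsupported distribution: $pc_id" >&2
      return 1
      ;;
  esac
}
```

On a Linode, one way to call it is `. /etc/os-release && prereq_commands "$ID" "$VERSION_ID"`. Printing rather than executing keeps the upgrade step under your control.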

- Install the NVIDIA CUDA Toolkit software that corresponds to your distribution.

  - Navigate to the NVIDIA CUDA Toolkit Download page. This page provides the installation instructions for the latest version of the CUDA Toolkit.

-

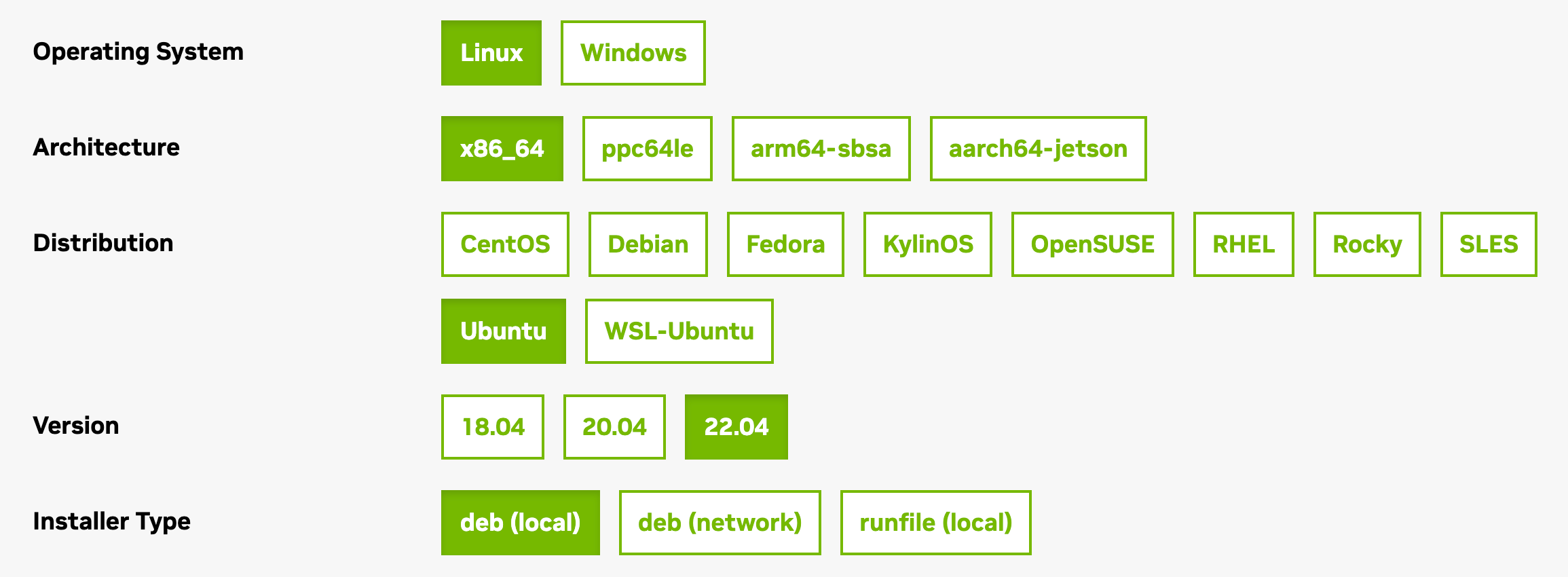

Under the Select Target Platform section, choose the following options:

-

Operating System: Linux

-

Architecture: x86_64

-

Distribution: Select the distribution you have installed on your GPU Linode (such as Ubuntu).

-

Version: Select the distribution version that's installed (such as 22.04).

-

Installer Type: Select from one of the following methods:

-

rpm (local) or deb (local): Stand-alone installer that contains dependencies. This is a much larger initial download size but is recommended for most users.

-

rpm (network) or deb (network): Smaller initial download size as dependencies are managed separately through the package management system. Some distributions may not contain the dependencies needed and you may receive an error when installing the CUDA package.

-

runfile (local): Installs the software outside of your package management system, which is typically not desired or recommended.

If you decide to use the runfile installation method, you may need to install gcc and other dependencies before running the installer file. In addition, you also need to disable any existing nouveau drivers that installed on most distributions by default. The runfile method is not covered in this guide. Instead, reference NVIDIA's runfile installation instructions for Ubuntu, Debian, CentOS, Fedora, or openSUSE.

-

-

-
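The Architecture, Distribution, and Version selections can be read from the Linode itself. The following is a small sketch that assumes a standard /etc/os-release file; the function name `target_platform` is hypothetical, and the download page may word a distribution's name differently from the `ID` field printed here.

```shell
#!/bin/sh
# target_platform [OS_RELEASE_FILE]
# Prints "Linux <architecture> <distribution id> <version>" as a reminder
# of what to pick in the Select Target Platform form.
# Defaults to /etc/os-release when no file is given.
target_platform() {
  tp_file="${1:-/etc/os-release}"
  if [ ! -r "$tp_file" ]; then
    echo "cannot read $tp_file" >&2
    return 1
  fi
  # Source the file in a subshell so its variables do not leak into the caller.
  (
    . "$tp_file"
    echo "Linux $(uname -m) ${ID:-unknown} ${VERSION_ID:-unknown}"
  )
}
```

Running `target_platform` with no arguments reports on the current system. The optional file argument exists mainly so the function can be tried against a sample file.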

  - The Download Installer (or similar) section should appear and display a list of commands needed to download and install the CUDA Toolkit. Run each command listed there.

- Reboot the GPU Linode after all the commands have completed successfully.

- Run nvidia-smi to verify that the NVIDIA drivers and CUDA Toolkit are installed successfully. This command should output details about the driver version, CUDA version, and the GPU itself.
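For unattended setups, the verification step can be wrapped in a small check. This is a sketch under a few assumptions: the function name `check_gpu_stack` is hypothetical, and nvcc is looked up on PATH and then under /usr/local/cuda/bin, which is the usual default location but may differ on your system. Because nvidia-smi ships with the driver, the sketch also looks for nvcc, the toolkit's compiler, as a separate sign that the toolkit itself is present.

```shell
#!/bin/sh
# check_gpu_stack
# Reports the GPU name and driver version through nvidia-smi, then looks
# for nvcc. Returns non-zero if nvidia-smi is unavailable or fails.
check_gpu_stack() {
  if ! command -v nvidia-smi >/dev/null 2>&1; then
    echo "nvidia-smi not found: driver not installed, or reboot pending" >&2
    return 1
  fi
  nvidia-smi --query-gpu=name,driver_version --format=csv,noheader || return 1

  # nvcc may not be on PATH after a package install, so also try the
  # conventional /usr/local/cuda/bin location (an assumption).
  if command -v nvcc >/dev/null 2>&1; then
    nvcc --version
  elif [ -x /usr/local/cuda/bin/nvcc ]; then
    /usr/local/cuda/bin/nvcc --version
  else
    echo "nvcc not found on PATH or in /usr/local/cuda/bin" >&2
  fi
}
```

Call `check_gpu_stack` after the reboot. A missing nvcc is reported as a warning rather than a failure, since the toolkit may simply be installed somewhere this sketch does not look.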

You should now be ready to run your CUDA-optimized workloads. You can optionally download NVIDIA's CUDA code samples and review CUDA's Programming Guide to learn more about developing software to take advantage of a GPU Linode.

Optional: After you have completed the installation, you can capture a custom image of the Linode and use it the next time you need to deploy a GPU Linode.

NVIDIA documentation

- CUDA FAQ: NVIDIA's FAQ resource for the CUDA platform.

- NVIDIA CUDA Installation Guide for Linux: The main installation guide for installing the CUDA Toolkit.

- CUDA Samples: Code samples demonstrating features found within the CUDA Toolkit.

- CUDA C++ Best Practices Guide: Discusses the best practices that developers can implement to get the most out of GPU Linodes.

- CUDA C++ Programming Guide: Provides details on how to develop programs that target GPUs.
