Geographic replication impacts transfers

The replica that is best-accessible to your current network is typically the target for uploads of content. After a successful upload, the other replica is informed of the update, and that same content is replicated to it.

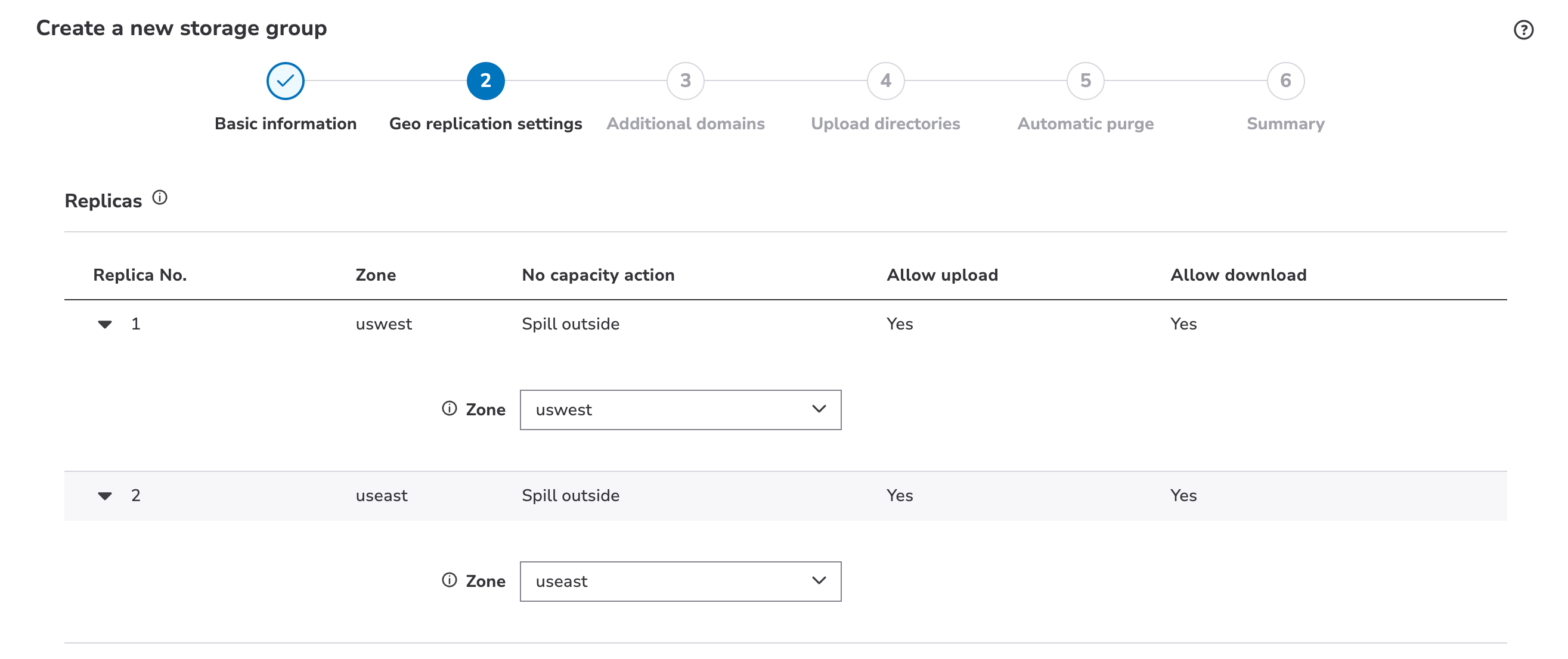

During configuration of a storage group, you set up at least two replicas of your content and select their geographic locations (Zones).

Download requests don't target a specific replica. Requests are DNS load-balanced to the best-accessible replica, based on the requesting client. The storage group's unique hostname is incorporated into your download requests, and the system determines which replica is best-accessible at the time of the request. A request is rerouted to another replica if either of the following occurs:

- The targeted replica is unavailable

- The targeted replica has been informed by the system that it does not have the latest content

This rerouting keeps your content constantly available to customers. As an example, if you're in Los Angeles, your upload target replica would typically be in the Western United States ("uswest"), and another replica could be in the Eastern United States ("useast"). Various configurations can be applied to best suit delivery to your end users. Talk to your account representative about a scenario that works best for you.
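
The rerouting rules above can be illustrated with a minimal sketch. It is not how NetStorage is implemented: the real selection happens through DNS load balancing, and the `Replica` class, its fields, and the `latency_ms` value used as a stand-in for "best-accessible" are all assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass
class Replica:
    zone: str          # e.g. "uswest" or "useast"
    available: bool    # False when the replica cannot serve requests
    has_latest: bool   # False when the replica was told its copy is stale
    latency_ms: float  # assumed stand-in for "best-accessible" to this client


def pick_replica(replicas):
    """Return the closest replica that is available and up to date."""
    for replica in sorted(replicas, key=lambda r: r.latency_ms):
        if replica.available and replica.has_latest:
            return replica
    return None  # no replica can serve the request right now


replicas = [
    Replica("uswest", available=True, has_latest=False, latency_ms=12.0),
    Replica("useast", available=True, has_latest=True, latency_ms=70.0),
]
chosen = pick_replica(replicas)
print(chosen.zone)  # the nearer replica is stale, so the request is rerouted
```

In this example the nearer replica is skipped because it does not yet hold the latest content, which corresponds to the second rerouting condition.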

The "Eventual Consistency" model

Replication between your replicas is governed by what is known as the Eventual Consistency Model. Put simply, your replica locations for content in NetStorage eventually reach a globally consistent and stable state, if changes do not continue to occur.

If an object changes faster than the normal replication latency through the system, updates may not appear at the same time on every replica, and intermediate versions may even appear to be skipped. For example, eventual consistency is delayed, or never reached, if uploads of the same asset continue in rapid succession.
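
To see why rapid changes can appear to be skipped, consider the toy model below. It assumes, purely for illustration, that a replication pass copies whatever version the source replica holds when the pass starts, takes a fixed `delay` to finish, and that passes run one after another. The function name and the model itself are hypothetical and do not describe NetStorage's actual replication mechanism.

```python
def versions_seen_remotely(uploads, delay):
    """Simulate which versions reach the second replica.

    uploads: list of (upload_time, version) tuples, sorted by time.
    delay:   assumed duration of one replication pass.
    Returns a list of (arrival_time, version) tuples.
    """
    seen = []
    clock = 0.0
    replicated = None
    while True:
        # Versions that exist on the source replica at the current time.
        current = [version for t, version in uploads if t <= clock]
        if current and current[-1] != replicated:
            # A pass copies the newest version and finishes `delay` later.
            replicated = current[-1]
            clock += delay
            seen.append((clock, replicated))
        else:
            # Nothing new to copy: jump ahead to the next upload, if any.
            later = [t for t, _ in uploads if t > clock]
            if not later:
                break
            clock = later[0]
    return seen


rapid = versions_seen_remotely([(0, "v1"), (1, "v2"), (2, "v3")], delay=5)
spaced = versions_seen_remotely([(0, "v1"), (10, "v2")], delay=5)
print(rapid)
print(spaced)
```

With uploads one time unit apart and a five-unit delay, the second replica in this model never observes `v2`. When uploads are spaced farther apart than the delay, every version arrives.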

NetStorage is designed for safe replication and geographical dispersion. It is not designed as a real-time system. It is important that you keep this model in mind when developing or applying use cases in NetStorage.

Factors that can impact Eventual Consistency

Replication may result in a lag in availability

A small eventual consistency lag often occurs between an upload to one NetStorage location and that object's availability from another replica. File size has no impact on this lag. As long as the file is available at one NetStorage replica, all edge servers can access it. The number of content changes made and the geographic distance between your replicas can impact this lag. For example, the lag may be slightly longer for replicas that are farther apart.

Multiple simultaneous logins can affect replication

Performance can suffer if simultaneous uploads from different upload locations all target the same storage group. Also be aware that the number of simultaneous logins by a given upload account user name is limited to approximately 90 connections.
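
One way to stay under the connection limit is to cap client-side concurrency. The sketch below uses Python's standard `ThreadPoolExecutor`. The `upload_file` function is a hypothetical placeholder for whatever upload method you use, and the cap of 20 is an assumed safety margin, not a recommended value.

```python
from concurrent.futures import ThreadPoolExecutor

MAX_CONNECTIONS = 20  # assumed margin, well under the ~90 connection limit


def upload_file(path):
    """Hypothetical placeholder for one upload session."""
    return f"uploaded {path}"


def upload_all(paths, max_connections=MAX_CONNECTIONS):
    """Upload every path using at most `max_connections` parallel workers."""
    with ThreadPoolExecutor(max_workers=max_connections) as pool:
        # map() preserves input order in its results.
        return list(pool.map(upload_file, paths))


results = upload_all([f"/dir/file{i}.bin" for i in range(5)])
print(results)
```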

When multiple sessions are used to manage the same files, an upload that starts first may well finish last. In the meantime, another upload of the same file might have started and already completed. Left unhandled, this would lead to a new file being overwritten by an old one.

To prevent this, an upload that starts second but finishes first gets written to disk, while the other session is discarded with a 409 response code. This ensures that only the freshest copy is preserved. For more information, contact your account representative.
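
The rule described above, where the later-started upload wins and the earlier-started one is rejected with a 409, can be modeled roughly as follows. The `ObjectStore` class and its method are hypothetical and serve only to illustrate the ordering logic. They are not a NetStorage API.

```python
class ObjectStore:
    """Toy model: keep the upload with the most recent start time."""

    def __init__(self):
        self.content = {}       # path -> stored bytes
        self.latest_start = {}  # path -> start time of the stored upload

    def finish_upload(self, path, started_at, data):
        """Called when an upload completes. Returns an HTTP-like status."""
        if started_at < self.latest_start.get(path, float("-inf")):
            return 409  # a more recently started upload already completed
        self.content[path] = data
        self.latest_start[path] = started_at
        return 200


store = ObjectStore()
# The second session started later (t=2) but finished first.
first_to_finish = store.finish_upload("/video/a.mp4", started_at=2, data=b"new")
# The first session started earlier (t=1) and finishes afterwards.
last_to_finish = store.finish_upload("/video/a.mp4", started_at=1, data=b"old")
print(first_to_finish, last_to_finish, store.content["/video/a.mp4"])
```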

Eventual Consistency and deletion of content

Deleting content follows the Eventual Consistency model and requires propagation to all replicas. When you delete an object, each replica removes its content and stops forwarding requests to other replicas that may still have the content. Data is no longer accessible for new downloads once all replicas have acknowledged the deletion. Downloads already in progress may still complete successfully.

NetStorage deletion occurs for most workflows in 10 minutes. Downstream caching and your delivery configuration will add delays specific to your configuration.

Deletion is permanent, and recovery services for accidental deletion aren't available.

Workarounds for Eventual Consistency

The following sections address scenarios that can be affected by the eventual consistency model, and offer some suggested workarounds.

Objects that change frequently

This applies to objects that change more than once an hour.

- HTML pages used for real-time purposes such as news, sports or signaling.

- Live Streaming manifest files that must change on every new segment published. You should not use NetStorage directly for these. Our Media Services Live product offerings are better suited for this, and they indirectly incorporate NetStorage into their workflow. Contact your account representative for details on these products.

For upload sources that are geographically spread out

If your upload sources are geographically spread out, be aware that one of your content management clients (but not a delivery client) may see a different view than another. If a more consistent view is required, consider having one of your replicas set up to accept uploads only if the primary is currently down. It then serves as a "failover replica."

There is a penalty to this, though: all uploads target the primary replica, so users that are actually closer to the failover replica may experience decreased performance.

Avoid delivering stale content from a CDN

The best practice is to use new origin object names whenever possible, rather than uploading under the same object name and overwriting it. For example, use version numbers in your filenames or paths. After uploading, update links on your web server or CMS accordingly, and finally delete the old object. With this method, you don't need to purge your CDN.
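
As a minimal sketch of the versioned-name approach, the helper below inserts a version number before the file extension. The function name and the `.vN` naming scheme are assumptions chosen for illustration. Any scheme that yields a new object name per change serves the same purpose.

```python
from pathlib import PurePosixPath


def versioned_name(path, version):
    """Return `path` with `.v<version>` inserted before the extension."""
    p = PurePosixPath(path)
    return str(p.with_name(f"{p.stem}.v{version}{p.suffix}"))


old_name = versioned_name("/css/site.css", 3)
new_name = versioned_name("/css/site.css", 4)
print(old_name, new_name)
```

After uploading the object under `new_name`, you would update your links to point to it and then delete the object stored under `old_name`.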

How to use the same file and path name schemes

If you do re-upload changed objects under the same object name, consider the following:

- Wait some time after uploading, before you issue a CDN purge. This reduces the probability of re-caching the old content from replicas that still return the old copy. Typically, this wait requirement should only be a few seconds, but only if no other objects were recently uploaded to that storage group.

- Use a lower CDN Time to Live (TTL) for files known to require updates without URL changes. However, a low TTL in your cache settings is no substitute for properly engineering an application to tolerate infrastructure delays. In fact, TTL settings lower than the average latency generate additional, unnecessary traffic, which can slow updates beyond what would naturally be present. To account for this, a minimum TTL is recommended. Currently, the minimum supported with NetStorage is 10 minutes.
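
The two recommendations above can be sketched as follows. The `upload` and `purge` callables are hypothetical stand-ins for your own upload and CDN purge steps, and the 5-second default wait is an assumption based on "a few seconds." A longer wait may be needed if other objects were recently uploaded to the storage group.

```python
import time

MIN_TTL_SECONDS = 10 * 60  # the 10-minute minimum stated above


def effective_ttl(requested_seconds):
    """Clamp a requested TTL so it never drops below the minimum."""
    return max(requested_seconds, MIN_TTL_SECONDS)


def replace_and_purge(upload, purge, settle_seconds=5.0, sleep=time.sleep):
    """Upload, wait for replication to settle, then purge the CDN."""
    upload()
    sleep(settle_seconds)  # lowers the chance of re-caching the old copy
    purge()


# Demo with recording stubs instead of real uploads, waits, or purges.
calls = []
replace_and_purge(
    upload=lambda: calls.append("upload"),
    purge=lambda: calls.append("purge"),
    sleep=lambda seconds: calls.append(("sleep", seconds)),
)
print(calls, effective_ttl(60))
```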

You should understand how NetStorage replaces existing content

To avoid potentially replicating partial data, NetStorage doesn't support partial updates to existing files. The table that follows gives more detail on what is supported.

| Supported | Not supported |
|---|---|
| Full replacement of existing files. When targeting an existing object, the entire object will be completely overwritten. This occurs first in the target replica, and eventually later in the other replica. Success is reported to the uploading client after completion of the upload to the first replica. The eventual consistency model always applies. | Partial updates to existing files. This includes operations such as resumption of interrupted file uploads, partial overwrites, and seek and write. Even though some of the client software and access methods described in this document allow the resumption of interrupted file uploads, NetStorage itself does not support it. This is in place to eliminate the possibility of inadvertently replicating partially uploaded content that is accessible to end users. |
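
Because resumed uploads are not supported, a client that is interrupted should resend the whole object rather than continue from an offset. A minimal sketch of that retry pattern follows. The `send_whole_file` callable is a hypothetical stand-in for your upload method, assumed to raise `ConnectionError` when a transfer is interrupted.

```python
def upload_with_restart(send_whole_file, data, max_attempts=3):
    """Retry an interrupted upload by resending the entire object.

    Returns the number of attempts used, or re-raises the last
    ConnectionError if every attempt fails.
    """
    for attempt in range(1, max_attempts + 1):
        try:
            send_whole_file(data)  # always from byte 0, never a resume
            return attempt
        except ConnectionError:
            if attempt == max_attempts:
                raise


# Demo with a fake sender that is interrupted once, then succeeds.
received = []
failures_left = [1]


def flaky_sender(data):
    if failures_left[0] > 0:
        failures_left[0] -= 1
        raise ConnectionError("interrupted")
    received.append(data)


attempts_used = upload_with_restart(flaky_sender, b"full object body")
print(attempts_used, received)
```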
