Key capabilities

IP Accelerator relies on four key capabilities to boost application performance while maintaining the security of the transmitted data. These are: dynamic mapping, transport protocol optimization, packet replication, and route optimization.

Dynamic mapping

Information gathered through real-time monitoring of Internet conditions and server loads is used to ensure that IP Accelerator end users are directed to the edge server that can best service their requests. As Internet or load conditions change, the dynamic nature of the IP Accelerator’s mapping system ensures that end users are always connected to the optimal edge server.
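
The selection logic described above can be pictured with a small sketch. It is an illustration only, not Akamai's mapping algorithm: the `EdgeServer` fields, the scoring formula, and the `load_penalty_ms` and `max_load` values are all assumptions made for this example.

```python
from dataclasses import dataclass


@dataclass
class EdgeServer:
    name: str
    rtt_ms: float  # measured round-trip time to the end user (hypothetical metric)
    load: float    # fraction of capacity in use, 0.0 to 1.0 (hypothetical metric)


def score(server: EdgeServer, load_penalty_ms: float = 100.0) -> float:
    """Lower is better: latency plus a penalty that grows with server load."""
    return server.rtt_ms + load_penalty_ms * server.load


def pick_edge(servers: list[EdgeServer], max_load: float = 0.9) -> EdgeServer:
    """Return the best-scoring server, skipping overloaded ones when possible."""
    candidates = [s for s in servers if s.load < max_load]
    if not candidates:
        candidates = servers  # every server is busy; fall back to the full list
    return min(candidates, key=score)


servers = [
    EdgeServer("edge-a", rtt_ms=20.0, load=0.95),  # closest, but overloaded
    EdgeServer("edge-b", rtt_ms=35.0, load=0.30),
    EdgeServer("edge-c", rtt_ms=60.0, load=0.10),
]
best = pick_edge(servers)  # edge-b under these sample numbers
```

Re-running `pick_edge` whenever fresh measurements arrive is what makes the mapping dynamic in this sketch: if the load on `edge-b` rises to 0.8, the same call selects `edge-c` instead.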

Transport protocol optimization

TCP performance degrades as the distance between endpoints grows, because each round trip takes longer. IP Accelerator applies several techniques to “shorten” the distance between endpoints.

First, it splits the TCP transmission into three segments:

- Segment 1: Between the end user and an Akamai edge server

- Segment 2: Between two Akamai edge servers

- Segment 3: Between the edge server and the customer’s origin site

Once a customer’s traffic reaches the Akamai network (segment 2), Akamai applies proprietary optimization techniques to reuse TCP connections, reducing the overhead associated with the setup and teardown of connections. In addition, IP Accelerator tunes TCP parameters to take further advantage of the shorter edge segments.

The key TCP parameters are:

- TCP Slow Start. By default, TCP begins a transfer at a low rate because the sender knows nothing yet about the bandwidth or latency of the path. As network conditions permit, TCP gradually increases the size of the transmission window, sending a greater number of packets between TCP acknowledgments. Since IP Accelerator has a comprehensive understanding of network conditions, it can avoid TCP slow start and initiate transfers with a much larger transmission window.

- TCP Timeout and Loss Recovery. When a TCP acknowledgment is not received from the destination in a timely fashion, the sender must retransmit the lost packets. By default, TCP’s decision to retransmit is based on elapsed time and does not take other factors into account, such as the size of the transmission window or the distance between origin and endpoints. Again, IP Accelerator benefits from its intimate knowledge of network conditions, initiating more aggressive retransmits as appropriate. Additionally, when data has to be retransmitted, IP Accelerator can once again bypass slow start.
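
To see why the starting window matters, the following sketch counts the round trips needed to deliver a fixed amount of data when the window doubles after every round trip. It is a deliberately simplified model of slow start (no packet loss, no slow-start threshold, no receiver window limit), and the window sizes and the 150 ms round-trip time are illustrative assumptions, not values used by IP Accelerator.

```python
def round_trips_to_send(total_segments: int, initial_window: int) -> int:
    """Count round trips when the window doubles after each round trip.

    Simplified slow-start model: no loss, no threshold, no receiver limit.
    """
    if total_segments <= 0:
        return 0
    if initial_window <= 0:
        raise ValueError("initial_window must be positive")
    window = initial_window
    sent = 0
    round_trips = 0
    while sent < total_segments:
        sent += window      # one window of segments goes out per round trip
        window *= 2         # exponential growth during slow start
        round_trips += 1
    return round_trips


RTT_MS = 150  # assumed long-haul round-trip time, for illustration only

small_start = round_trips_to_send(100, initial_window=2)   # 6 round trips
large_start = round_trips_to_send(100, initial_window=64)  # 2 round trips

print(f"small initial window: {small_start * RTT_MS} ms")
print(f"large initial window: {large_start * RTT_MS} ms")
```

Under these assumptions, the larger starting window delivers the same 100 segments in a third of the time. The same arithmetic applies after a retransmission if the sender does not have to fall back to a small window.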

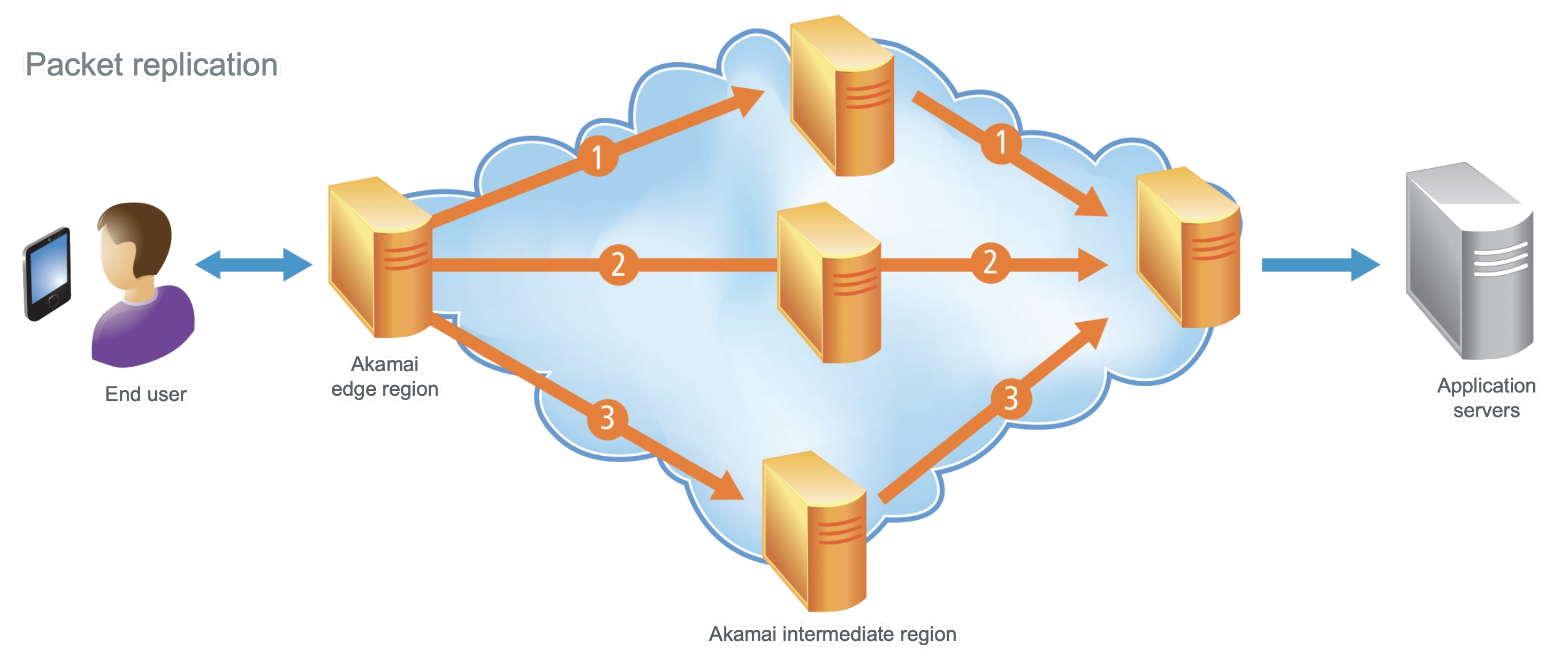

Packet replication

Application performance is adversely affected by packet loss, which is particularly common when traffic traverses international network paths. IP Accelerator protects against packet loss (and improves web application availability) by replicating packets and transmitting them along different network paths.

When a user request is received by the optimal edge server, the packets are replicated and sent along up to three different network paths. An Akamai server that resides near the customer site (outside the firewall) receives the multiple packet streams, discards duplicate packets, and reconstructs a single stream. Similarly, packets sent by the application server are replicated and transmitted along different paths back to the edge server. There, duplicate packets are discarded and a single reconstructed traffic stream is sent to the end user.
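
The replicate-and-reconstruct step can be sketched as follows. This is a conceptual model only: the `(sequence number, payload)` packet format, the function names, and the fixed sets of dropped packets are assumptions chosen to keep the example deterministic, and they do not describe Akamai's wire format.

```python
def send_over_path(packets, dropped):
    """Simulate one network path that loses the given sequence numbers."""
    return [(seq, payload) for seq, payload in packets if seq not in dropped]


def reconstruct(streams):
    """Merge several streams, discard duplicates, and restore packet order."""
    seen = {}
    for stream in streams:
        for seq, payload in stream:
            seen.setdefault(seq, payload)  # keep the first copy, ignore repeats
    return [(seq, seen[seq]) for seq in sorted(seen)]


packets = [(seq, f"data-{seq}") for seq in range(6)]

# Each of the three paths loses a different pair of packets (hypothetical losses).
drops_per_path = [{1, 4}, {0, 1}, {4, 5}]
streams = [send_over_path(packets, dropped) for dropped in drops_per_path]

rebuilt = reconstruct(streams)
print(rebuilt == packets)  # True: every packet arrived on at least one path
```

Each path alone delivers only four of the six packets, yet the merged stream is complete, because a packet is lost only if it is dropped on all three paths at once.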

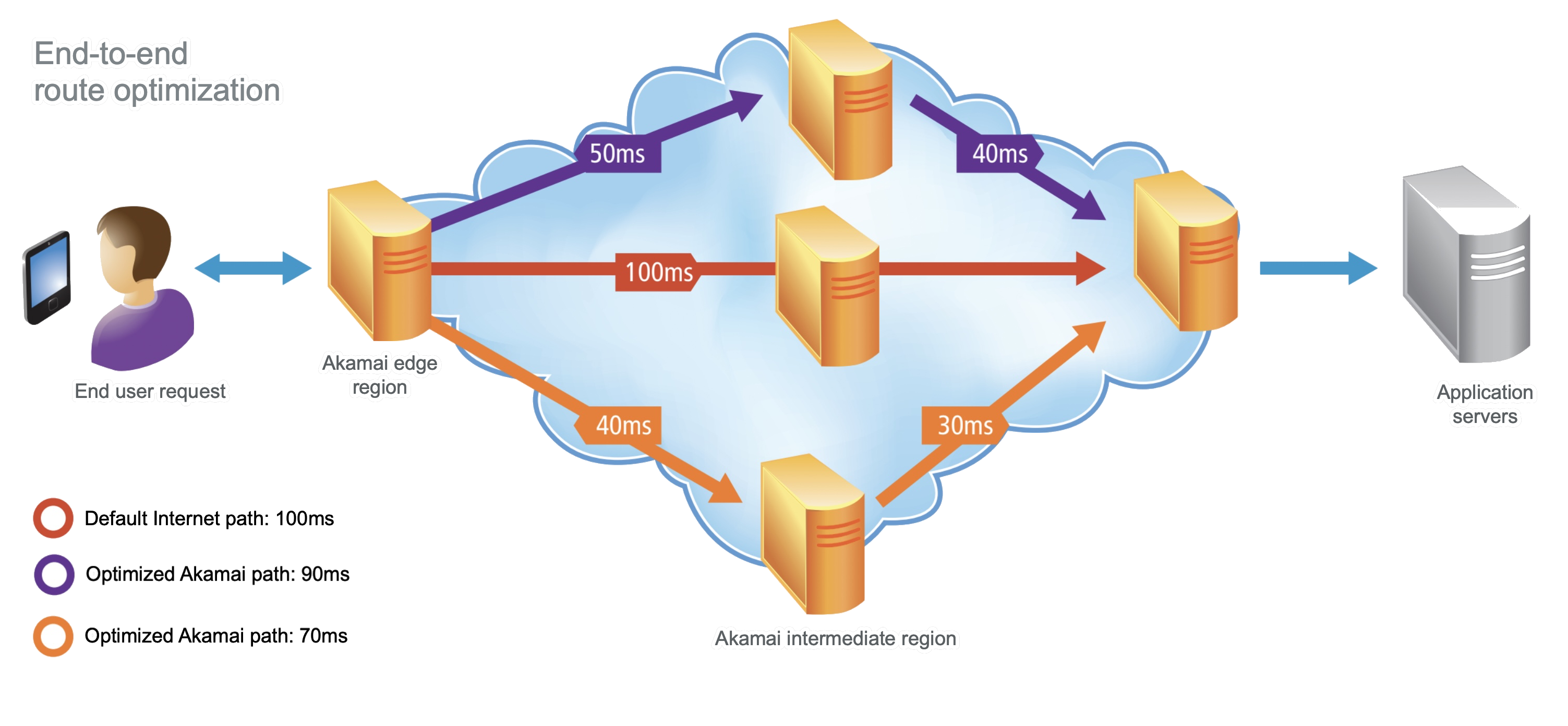

Route optimization

Akamai’s SureRoute feature, the same technology used in our web delivery products, addresses one of the major limitations of Border Gateway Protocol (BGP), the protocol that determines how traffic is routed across the Internet.

BGP employs an algorithm that looks for the fewest hops between endpoints to determine a route. However, BGP gives no consideration to performance, often sending data through heavily congested routes. This increases the likelihood of high latency and packet loss, which degrades application performance.

To address this, Akamai’s SureRoute feature leverages Akamai’s global network and scale. SureRoute identifies alternate communication paths between the end user and the application server, and continually assesses the performance of these routes to ensure that traffic is sent along the fastest path. These alternate paths provide a fail-over capability should the primary path encounter congestion or network failure.
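
A minimal sketch of performance-based route selection with failover is shown below. It is not the SureRoute implementation: the route names, the probe samples, and the cost formula (median round-trip time plus a penalty for lost probes) are assumptions chosen for illustration.

```python
from statistics import median
from typing import Optional


def route_cost(samples: list[Optional[float]], loss_penalty_ms: float = 1000.0) -> float:
    """Median RTT of successful probes plus a penalty proportional to probe loss.

    A sample of None represents a probe that never came back.
    """
    delivered = [s for s in samples if s is not None]
    if not delivered:
        return float("inf")  # no probe succeeded; treat the path as down
    loss_rate = 1 - len(delivered) / len(samples)
    return median(delivered) + loss_penalty_ms * loss_rate


def rank_routes(probes: dict[str, list[Optional[float]]]) -> list[str]:
    """Order candidate routes from fastest to slowest by measured cost."""
    return sorted(probes, key=lambda name: route_cost(probes[name]))


# Hypothetical probe results in milliseconds for three candidate paths.
probes = {
    "direct-bgp": [180.0, 210.0, None, 195.0],
    "via-edge-x": [120.0, 125.0, 118.0, 122.0],
    "via-edge-y": [140.0, 138.0, 145.0, 141.0],
}

ranking = rank_routes(probes)
print(ranking)  # ['via-edge-x', 'via-edge-y', 'direct-bgp']
```

Because the ranking is recomputed from fresh probes, failover falls out of the same logic: if every probe over `via-edge-x` starts failing, its cost becomes infinite and `via-edge-y` moves to the front of the list.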
